Achieving Artificial Intelligence involves creating machines capable of human-like thought processes. This endeavor is predicated on the belief that human cognition can be modeled and replicated in non-human entities. In essence, AI assumes that human thought follows logical patterns that are both understandable and reproducible.

Philosophy, the discipline dedicated to the study of human thought and reasoning, has long sought to formalize these processes. In the 19th century, philosophers tried to express human reasoning formally, as a set of specific rules that could potentially be replicated. To do so, they sought a universal language capable of describing these rules. This quest culminated in Principia Mathematica by Alfred North Whitehead and Bertrand Russell, published in three volumes between 1910 and 1913, a groundbreaking work that aimed to provide a formal foundation for mathematics and, by extension, human logic.

Principia Mathematica focused on proving the logical foundations of mathematics. In other words, it aimed to demonstrate that the fundamental concepts and reasoning used in mathematics could be defined and derived from pure logic. The book famously includes a proof that 1+1=2 derived from logic alone, rather than asking the reader to accept it as given. Reasoning from pure logic and trusting logical truths is also one of the fundamentals of human thinking.

With the proof that mathematical reasoning can be formalized, researchers and mathematicians doubled down on applying that reasoning to a machine.

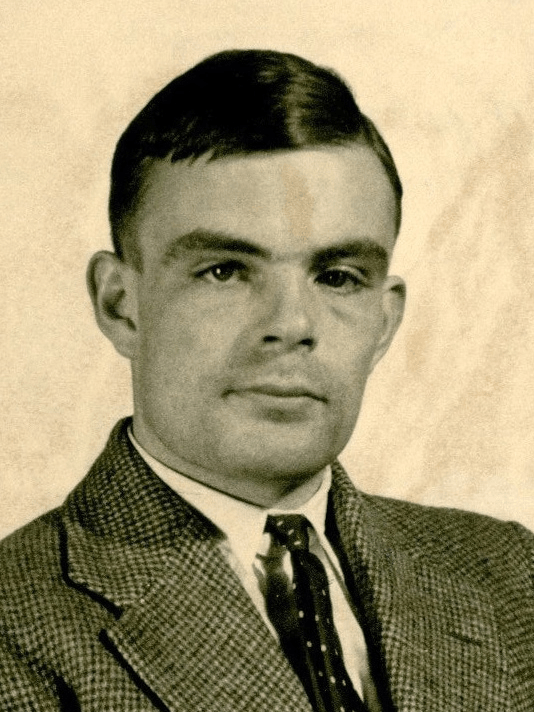

Turing Machine: The First Intelligent Machine

In 1936, Alan Turing proposed a hypothetical machine, now called the Turing machine, to tackle the Decision Problem (Entscheidungsproblem). Turing described a machine that could perform any computation if given enough time and memory. This was revolutionary work in the field of mathematical computing and became the theoretical basis of almost all computing machines to this day, from PCs to microwaves. Turing is therefore rightly known as the father of computer science. He also set out what it would mean for a machine to be “intelligent”, in a line often attributed to him:

A computer would deserve to be called intelligent if it could deceive a human into believing that it was human.

This became the fundamental measure of a machine’s ability to exhibit intelligent behavior and is now known as the Turing Test.
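To make the idea of a Turing machine more concrete, here is a minimal sketch of a simulator in Python. It is an illustration only, not Turing's own notation: the function name `run_turing_machine`, the rule table `INVERT_RULES`, and the `"start"` and `"halt"` state names are all assumptions made for this example. The machine reads one symbol at a time, looks up a rule for its current state and symbol, writes a symbol, moves the head, and switches state.

```python
def run_turing_machine(tape, rules, state="start", blank="_", max_steps=1000):
    """Run a one-tape Turing machine and return the final tape contents.

    rules maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is "L" or "R". The machine stops in the state "halt".
    """
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = 0
    for _ in range(max_steps):
        if state == "halt":
            break
        symbol = cells.get(head, blank)
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += 1 if move == "R" else -1
    if not cells:
        return ""
    lowest, highest = min(cells), max(cells)
    contents = "".join(cells.get(i, blank) for i in range(lowest, highest + 1))
    return contents.strip(blank)


# Hypothetical rule table: flip every bit, then halt at the first blank cell.
INVERT_RULES = {
    ("start", "0"): ("1", "R", "start"),
    ("start", "1"): ("0", "R", "start"),
    ("start", "_"): ("_", "R", "halt"),
}

print(run_turing_machine("1011", INVERT_RULES))  # → 0100
```

The rule table is the "program" here. Swapping in a different table changes what the same simple machine computes, which is the core of Turing's idea.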

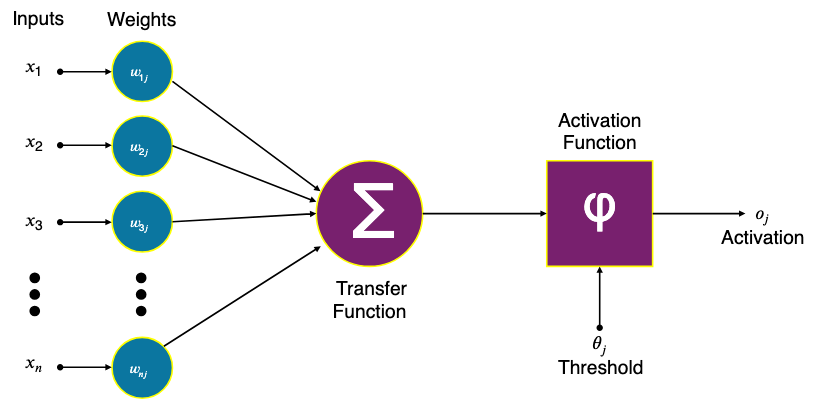

Turing’s landmark work was soon followed by the concept of artificial neurons, proposed by McCulloch and Pitts in 1943. An artificial neuron is an independent computing node that is inspired by the biological neuron and designed to mimic its behavior. An artificial neuron works as follows:

- Input: The neuron receives input from other neurons or some external source.

- Weighted Summation: Each input is multiplied by its own weight, and the products for all inputs are summed together through a transfer function.

- Activation Function: The weighted sum is then passed through an activation function, which decides what the neuron outputs, for example whether it fires at all. As a loose analogy, think of how two people can come to different conclusions from the same set of facts because of their own thinking patterns and biases; the activation function plays a similar role in shaping how a neuron responds to its inputs.

- Output: Finally, the output is sent to other neurons or used as the final output of the entire system.
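The four steps above can be sketched in a few lines of Python. This is a minimal illustration, not the original McCulloch and Pitts formulation: the names `step` and `neuron_output` are made up for this example, and the `bias` term is an added assumption that the list above does not mention. A simple threshold is used as the activation function.

```python
def step(value, threshold=0.0):
    """Threshold activation: the neuron fires (1) or stays silent (0)."""
    return 1 if value >= threshold else 0


def neuron_output(inputs, weights, bias=0.0, activation=step):
    """Weighted sum of the inputs, passed through an activation function."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(weighted_sum)


# With these hand-picked weights the neuron behaves like a logical AND gate.
print(neuron_output([1, 1], [1.0, 1.0], bias=-1.5))  # → 1
print(neuron_output([1, 0], [1.0, 1.0], bias=-1.5))  # → 0
```

In a network, the value returned by one neuron becomes one of the inputs of the next.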

The concept of artificial neurons took the world one step closer to machines that could learn from feedback, which was realized in the form of SNARC in 1951. SNARC (Stochastic Neural Analog Reinforcement Calculator) was built by Marvin Minsky and Dean Edmonds. It is considered to be the first artificial neural network machine and one that could learn through reinforcement.

Using vacuum tubes as analog artificial neurons, Minsky designed the system to mimic a rat trying to cross a maze. Every time the machine chose the correct path, positive reinforcement was applied, making the machine more likely to choose that path the next time, so it learned to solve the maze faster.
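The reinforcement idea can be sketched in software. The snippet below is a toy model under stated assumptions, not a description of SNARC's actual circuitry: the maze is reduced to a single junction, and the names `reinforce` and `choice_probabilities` and the reward value are hypothetical. Each time a path is rewarded, its preference grows, so it becomes more likely to be picked next time.

```python
def choice_probabilities(preferences):
    """Turn raw preference scores into the probability of picking each path."""
    total = sum(preferences.values())
    return {path: score / total for path, score in preferences.items()}


def reinforce(preferences, path, reward=1.0):
    """Return new preferences with the rewarded path strengthened."""
    updated = dict(preferences)
    updated[path] += reward
    return updated


# Start with no preference, then reward the correct turn three times.
preferences = {"left": 1.0, "right": 1.0}
for _ in range(3):
    preferences = reinforce(preferences, "right")

print(choice_probabilities(preferences))  # "right" is now chosen 80% of the time
```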

This and other pioneering work that followed culminated in the Dartmouth Conference in 1956, which brought together the major innovators in the field, including John McCarthy, who coined the term Artificial Intelligence for the conference. It is widely considered the birth of AI.

Once AI was established as a new academic field, various scientists did impressive work in the years that followed. These included the General Problem Solver (GPS), which aimed to solve general problems by mimicking human problem solving; STRIPS, an automated planner developed for the mobile robot Shakey; ELIZA, one of the first chatbot systems; and the WABOT project, which produced the first full-scale humanoid robot with limbs. This rapid development pulled in a lot of funding from government agencies, as the buzz around the possibilities of AI was immense.

The AI Winter

All good things must come to an end, and with AI the funding agencies eventually recovered from their infatuation with the possibilities of these futuristic solutions. The designs created by many scientists worked well as prototypes but could not perform at scale or with satisfactory precision in real life. Even though AI concepts and solutions were advancing at a great pace, the computational power of hardware was still severely limited at the time, adding to the long list of problems for researchers.

DARPA, the US defense research agency, was the major source of funding for researchers in the US and had given a massive contract to researchers at Carnegie Mellon University to develop a speech recognition system that pilots could use to command their planes. The results of this project, along with other high-profile machine translation projects, were so underwhelming that DARPA pulled its funding. People lost interest in AI, and with the funding halted, an extended period of little progress in the field began, a period now known as the AI Winter.

With funding for projects dried up, most researchers went back to the drawing board and focused on theoretical research to refine the performance of AI systems. There was an increased focus on connectionism, which explored neural networks and how they could be made stronger to improve AI. The first AI winter lasted close to a decade and was finally broken by innovations like XCON, often cited as the first commercially successful expert system, and the rise of dedicated hardware for LISP, a hugely popular language for AI programming. But compute power and hardware capabilities were still severely limited, and most of the LISP hardware industry collapsed by the early 1990s because the machines were too expensive to maintain. More than 300 companies shut down, and the industry soon went into another AI winter.

The Second AI Winter

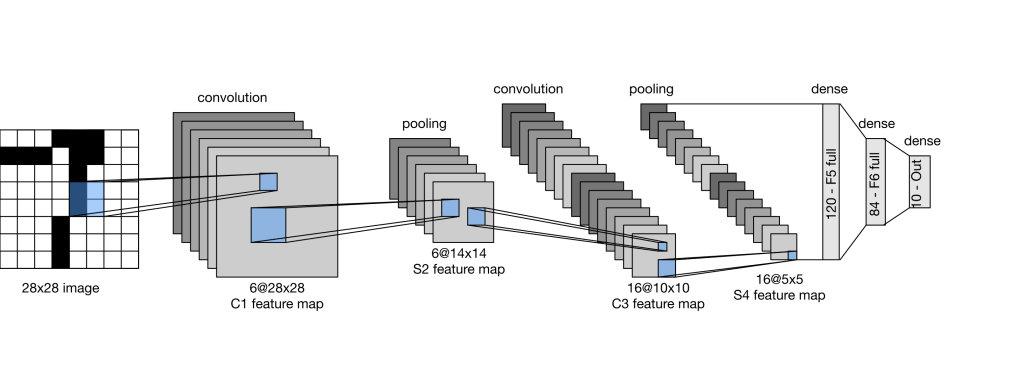

Fortunately, the second AI winter was vastly different from the first. Behemoths like IBM and Microsoft had identified the value of AI to industry and understood that the cost of creating and managing these systems was the primary prohibitive factor. At the same time, industry had recognized the accuracy of Moore’s law and believed that sooner or later computational power would become cheap enough to make developing such AI solutions feasible. Companies also realized that developing niche solutions was the way to go, rather than trying to come up with a general AI solution. Large companies did a lot of AI research and development in fields such as logistics, search engines and medical diagnosis. A landmark of this period was LeNet-5, introduced in 1998. LeNet-5 was a handwritten digit recognition model that was commercially viable and was used by many banks to process millions of cheques every day.

At the same time, researchers were now wary of using the term “AI” and started using terms like informatics, machine learning, knowledge-based systems and cognitive systems to give their work more credibility and a better chance of acceptance. Through the slow decades of the 1990s and 2000s, there were isolated developments in the field, while the age of data was just around the corner.

The Age of Big Data

After the year 2000, computation power rose massively around the world, coinciding with the spread of personal devices and smartphones, which led to an explosion in social media and e-commerce. The internet was now at our fingertips, economies of scale in computer production had kicked in, and devices were becoming more accessible to everyone. The amount of data being produced by the world grew manifold. We had entered the age of big data.

With a massive amount of data available in every field to train machines, AI made a huge comeback with recommendation systems, NLP solutions, image and speech recognition systems, geoservices, warehouse automation robotics and more. With so much data available, AI models could be trained in more detail, which popularized deep learning, in which multiple hidden layers of neurons help the model reach higher accuracy. Multimodal tasks like voice-to-text and text-to-image synthesis made huge progress in accuracy and precision.

Generative AI

Finally, we come to the current poster child of AI: Generative AI. In 2014, Ian Goodfellow and his colleagues introduced the concept of Generative Adversarial Networks (GANs). A GAN is a machine learning framework designed to generate new data with the same statistics as the training data. A GAN involves two neural networks:

- Generator: This network generates new data instances.

- Discriminator: This network evaluates the generated data and tries to distinguish it from real data.

The two networks are trained in a competitive process. The generator tries to produce data that can fool the discriminator, while the discriminator tries to accurately identify fake data. Over time, both networks improve, leading to the generation of increasingly realistic data.
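The competition between the two networks is usually expressed as a pair of loss functions. The sketch below shows only that scoring step, not a full training loop, and the function names `discriminator_loss` and `generator_loss` are chosen for this example. It assumes the discriminator outputs, for each sample, a probability strictly between 0 and 1 that the sample is real.

```python
import math


def discriminator_loss(scores_on_real, scores_on_fake):
    """Low when real samples score near 1 and generated samples score near 0."""
    real_term = sum(math.log(p) for p in scores_on_real) / len(scores_on_real)
    fake_term = sum(math.log(1.0 - p) for p in scores_on_fake) / len(scores_on_fake)
    return -(real_term + fake_term)


def generator_loss(scores_on_fake):
    """Low when the discriminator is fooled into scoring generated samples near 1."""
    return sum(math.log(1.0 - p) for p in scores_on_fake) / len(scores_on_fake)


# A sharp discriminator has a lower loss than a confused one...
sharp = discriminator_loss([0.9, 0.8], [0.1, 0.2])
confused = discriminator_loss([0.6, 0.5], [0.5, 0.4])
print(sharp < confused)  # → True

# ...and a generator whose fakes are scored as real has a lower loss.
print(generator_loss([0.9]) < generator_loss([0.1]))  # → True
```

During training, each network's weights are adjusted to reduce its own loss, which is what pushes the two to improve against each other.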

GANs were followed by other generative models, such as the first GPT model, released in 2018. GPT models are built on the Transformer architecture rather than on GANs and generate text, while related models went on to generate images and even music. With the rise of deep learning and of hardware suited to machine learning, such as GPUs, generative models progressed rapidly, with GPT-3 and DALL-E released in 2020 and 2021 respectively.

Looking ahead, the potential of generative AI seems limitless. With applications in health, media, content creation, the arts, coding and more, generative AI is already widespread, and we can expect these systems to keep improving in human-like responses and beyond very soon.

Image credits

Cover Image: Photo by BoliviaInteligente on Unsplash

Others: Wikipedia